What is Web App Pentesting? (Part Two)

I’m Matt Dunn, a lead penetration tester at Raxis. This is the second of a two-part series, aimed at explaining the differences between authenticated and unauthenticated web application testing. I’ll also discuss the types of attacks we attempt in each scenario so you can see your app the way a hacker would.

Although some applications allow users to access some or all of their functionality without providing credentials – think of simple mortgage or BMI calculators, among many others – most require some form of authentication to confirm who you are before you can use them. If there are multiple user roles, your authenticated identity also determines what privileges you have and what features you can access. This is commonly referred to as role-based access control.

As I mentioned in the previous post, Raxis conducts web application testing from the perspectives of both authenticated and unauthenticated users. In authenticated user scenarios, we also test the security and business logic of the app for all user roles. Here’s what that looks like from a customer perspective.

Unauthenticated Testing

As the name suggests, testing as an unauthenticated user involves looking for vulnerabilities that are public-facing. The most obvious is access: Can we use our knowledge and tools to get past the authentication process? If so, that’s a serious problem, but it’s not the only thing we check.

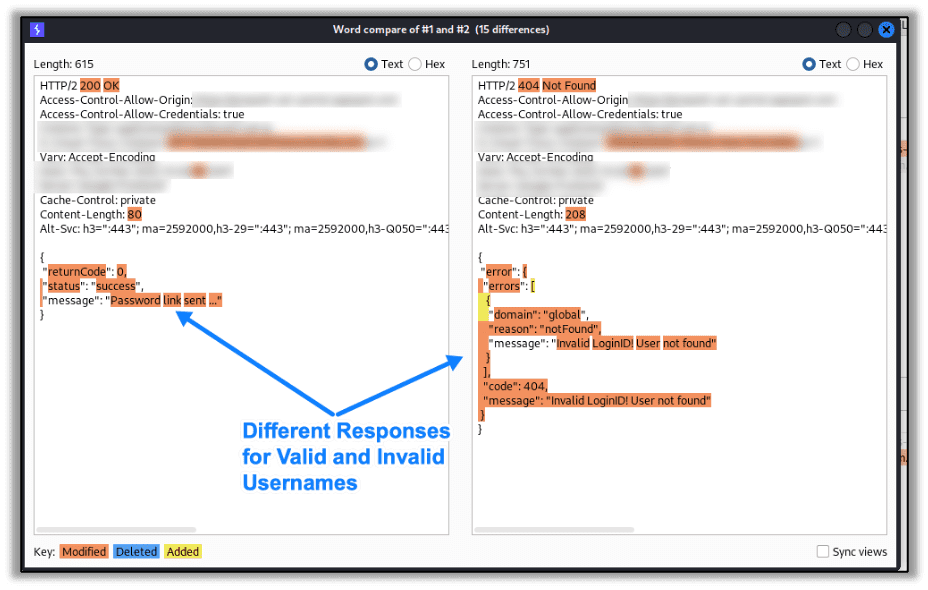

In previous articles and videos, we’ve talked about account enumeration – finding valid usernames based on error messages, response lengths, or response times. We will see what information the app provides after unsuccessful login attempts. If we can get a valid username, then we can use other tools and tactics to determine the password. As an example, a forgot password API may return noticeably different responses for valid and invalid usernames.
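The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not a tool we use: the `Observed` record, the `enumeration_signals` function, the sample response bodies, and the 200 ms timing threshold are all hypothetical, and the responses are hard-coded rather than fetched from a real API.

```python
from dataclasses import dataclass


@dataclass
class Observed:
    """What a tester records about one HTTP response (hypothetical structure)."""
    status: int
    body: str
    elapsed_ms: float


def enumeration_signals(known_invalid, candidate, timing_threshold_ms=200.0):
    """List the ways a candidate response differs from a known-invalid baseline."""
    signals = []
    if candidate.status != known_invalid.status:
        signals.append("status code")
    if candidate.body != known_invalid.body:
        signals.append("error message")
    if len(candidate.body) != len(known_invalid.body):
        signals.append("response length")
    # The threshold is an assumed value; real timing tests need many samples.
    if abs(candidate.elapsed_ms - known_invalid.elapsed_ms) > timing_threshold_ms:
        signals.append("response time")
    return signals


# Made-up responses from a forgot password endpoint.
baseline = Observed(200, '{"message": "User not found"}', 40.0)
candidate = Observed(200, '{"message": "Reset email sent"}', 310.0)
print(enumeration_signals(baseline, candidate))
```

Any non-empty result suggests the app is leaking whether a username exists. The usual fix is to return the same message, status, and roughly the same timing for both cases.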

From an unauthenticated standpoint, we will also try injection attacks, such as SQL Injection, to attempt to get past login mechanisms. We’ll also look for protective HTTP response headers, such as Strict-Transport-Security and either X-Frame-Options or Content-Security-Policy, to ensure users are as secure as they can be.
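A header check like the one described above might look like the following sketch. The function name `missing_security_headers` and the sample headers are hypothetical, and the logic assumes that framing protection can come from either X-Frame-Options or a `frame-ancestors` directive in Content-Security-Policy. It works on a plain dictionary of headers rather than a live response.

```python
def missing_security_headers(headers):
    """Return the expected protections that are absent from a response's headers."""
    # Header names are case-insensitive, so normalize them before comparing.
    normalized = {name.lower(): value for name, value in headers.items()}
    missing = []

    if "strict-transport-security" not in normalized:
        missing.append("Strict-Transport-Security")

    # Framing (clickjacking) protection: X-Frame-Options or CSP frame-ancestors.
    csp = normalized.get("content-security-policy", "")
    if "x-frame-options" not in normalized and "frame-ancestors" not in csp.lower():
        missing.append("X-Frame-Options or CSP frame-ancestors")

    return missing


# Made-up response headers for illustration.
sample = {"Content-Type": "text/html", "X-Frame-Options": "DENY"}
print(missing_security_headers(sample))
```

A presence check is only a starting point. The header values matter as well, for example a very short `max-age` on Strict-Transport-Security offers little protection.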

With some applications, we can use a web proxy tool to see which policies are enforced on the client-side interface and which are enforced on the server side. In a future post, we’ll go into more detail about web proxies. For now, it’s only important to know that proxies sometimes reveal vulnerabilities that allow us to bypass client-side security controls and interact with the server directly.

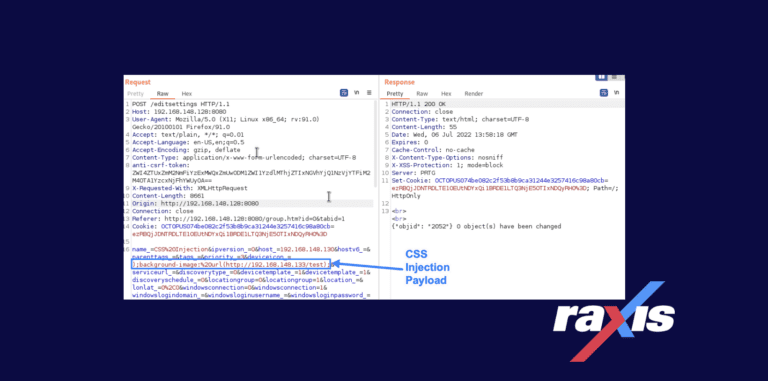

As an example, fields that require specific values, such as an email field, may be checked for proper format only on the client side (e.g., using JavaScript). Client-side checks like this can be bypassed, and without proper safeguards in place, the server itself might accept any data, including malicious code, submitted directly to that same field. This can lead to attacks such as Cross-Site Scripting, as shown in my CVE-2021-27956, which bypasses email verification.
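To make the server-side safeguard concrete, here is a minimal sketch of validating the field again on the server and encoding it on output. The names `accept_email` and `render_email` are hypothetical, and the regular expression is a deliberately strict simplification, not a complete email address parser.

```python
import html
import re

# Simplified, strict pattern (an assumption, not a full RFC 5322 parser).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def accept_email(raw):
    """Validate on the server, regardless of any check the browser performed."""
    if not EMAIL_PATTERN.fullmatch(raw):
        raise ValueError("invalid email address")
    return raw


def render_email(value):
    """Encode on output so any stored markup is displayed as text, not executed."""
    return html.escape(value)


print(accept_email("user@example.com"))
print(render_email("<script>alert(1)</script>"))
```

The two functions are complementary. Validation rejects bad input that a proxy sends straight to the server, and output encoding limits the damage if something unexpected is stored anyway.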

Authenticated Testing

During an authenticated web application test, we use many of the same tactics, toward the same ends, as we do with unauthenticated tests. However, we have the added advantage of user access. This vantage point exposes more of the application’s attack surface, and with it more potential vulnerabilities. This is why we recommend authenticated testing: to ensure that even a malicious user with valid credentials cannot attack the application.

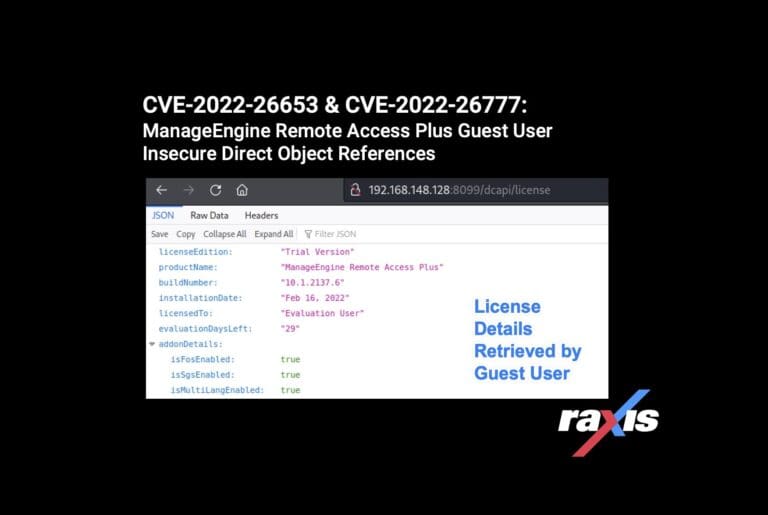

Once authenticated, we attempt to see if the app restricts users to the level of access that matches its business logic. This might mean we log in with freemium-level credentials and see if we can get to paid-users-only functionality. Or, in the role of a basic user, we may try to gain administrator privileges.
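One way to picture this kind of check is to compare what each role should be able to do against what the server actually allowed during testing. The sketch below assumes a hypothetical permission table (`ROLE_PERMISSIONS`), hypothetical action names, and hand-recorded test results. It is an illustration of the idea, not a description of any particular app.

```python
# Hypothetical mapping of roles to the actions the business logic intends.
ROLE_PERMISSIONS = {
    "free": {"view_dashboard"},
    "paid": {"view_dashboard", "export_report"},
    "admin": {"view_dashboard", "export_report", "manage_users"},
}


def is_allowed(role, action):
    """Return True if the intended business logic grants this action to the role."""
    return action in ROLE_PERMISSIONS.get(role, set())


def escalation_findings(role, observed):
    """Return actions the server permitted even though the role should not have them.

    `observed` maps an action name to whether the request succeeded in testing.
    """
    return sorted(
        action
        for action, succeeded in observed.items()
        if succeeded and not is_allowed(role, action)
    )


# Made-up results from testing as a freemium user.
observed = {"view_dashboard": True, "export_report": True, "manage_users": False}
print(escalation_findings("free", observed))
```

Anything this returns is a gap between the intended access model and what the server enforces, which is exactly what we report.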

As with an unauthenticated test, we also see how much filtering of data is done at the interface vs. the server. Some apps have very tight server-level controls for authentication but rely on less-restrictive policies once the user is validated.

Though it may seem simple from the outside, one of the hardest things for web app developers to secure is file uploads.

This is another topic we’ll explore further in a future post. However, one good example of the complexity involved is photo uploads. Many apps enable or require users to create profiles that include pictures or avatars. One way to restrict the file type is by accepting only .jpg or .png file extensions. Hackers can sometimes get past this restriction by giving an executable file a double extension – malware.exe.jpg, for example.
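As a rough sketch of why an extension check alone falls short, the example below also inspects the first bytes of the file. The function name `is_acceptable_image` is hypothetical, and rejecting every filename with more than one dot is an assumed (and fairly strict) policy. The PNG and JPEG signatures are the standard leading bytes for those formats.

```python
import os

# Leading bytes ("magic numbers") that identify each allowed image format.
SIGNATURES = {
    ".png": (b"\x89PNG\r\n\x1a\n",),
    ".jpg": (b"\xff\xd8\xff",),
    ".jpeg": (b"\xff\xd8\xff",),
}


def is_acceptable_image(filename, content):
    """Accept an upload only if its name and its leading bytes both look like an image."""
    base, ext = os.path.splitext(os.path.basename(filename).lower())
    signatures = SIGNATURES.get(ext)
    if signatures is None:
        return False
    # Assumed policy: reject double extensions such as "malware.exe.jpg".
    if "." in base:
        return False
    # The content must start with a signature matching the claimed type.
    return content.startswith(signatures)


print(is_acceptable_image("avatar.png", b"\x89PNG\r\n\x1a\n" + b"rest of file"))
print(is_acceptable_image("malware.exe.jpg", b"MZ\x90\x00"))
```

Even with both checks, a file can carry a valid image header followed by a malicious payload, so this complements rather than replaces measures such as storing uploads outside the web root and scanning them.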

Another problem is that malicious code could be inserted into otherwise legitimate file types such as Word documents or spreadsheets. For many apps, however, it’s absolutely necessary to allow these file types. When we encounter such situations, we often work with the customers and recommend other security measures that allow the app to work as advertised but that also detect and block malware.

Conclusion

As a software engineer by training, I have one advantage in testing web applications: I understand the mindset of the developers working on them. People building apps start with the goal of creating something useful for customers. As time goes on, the team changes or users’ needs change, and sometimes vulnerabilities are left behind. This can happen in expected ways, such as outdated libraries, or unexpected ways, such as missing access control on a mostly unused account type.

At Raxis, we employ a group of experts with diverse experiences and skillsets who will intentionally try to break the app and use it improperly. Having testers who have also developed applications gives us empathy for app creators. Even as we attack their work, we know that we are helping them remediate vulnerabilities and making it possible for them to achieve their application’s purpose.

Want to learn more? Take a look at the first part of our Web Application Penetration Testing discussion.